By H. E. Puthoff

Quantum theory predicts, and experiments verify, that empty space (the vacuum) contains an enormous residual background energy known as zero-point energy (ZPE). Originally thought to be of significance only for such esoteric concerns as small perturbations to atomic emission processes, it is now known to play a role in large-scale phenomena of interest to technologists as well, such as the inhibition of spontaneous emission, the generation of short-range attractive forces (e.g., the Casimir force), and the possibility of accounting for sonoluminescence phenomena. ZPE topics of interest for spaceflight applications range from fundamental issues (where does inertia come from, and can it be controlled?), through laboratory attempts to extract useful energy from vacuum fluctuations (can the ZPE be “mined” for practical use?), to scientifically grounded extrapolations concerning “engineering the vacuum” (is “warp-drive” space propulsion a scientific possibility?). Recent advances in research into the physics of the underlying ZPE indicate potential applications in all these areas of interest.

The concept “engineering the vacuum” was first introduced by Nobel Laureate T. D. Lee in his book Particle Physics and Introduction to Field Theory. As stated in Lee’s book: “The experimental method to alter the properties of the vacuum may be called vacuum engineering…. If indeed we are able to alter the vacuum, then we may encounter some new phenomena, totally unexpected.” Recent experiments have indeed shown this to be the case.

With regard to space propulsion, the question of engineering the vacuum can be put succinctly: “Can empty space itself provide the solution?” Surprisingly enough, there are hints that potential help may in fact emerge quite literally out of the vacuum of so-called “empty space.” Quantum theory tells us that empty space is not truly empty, but rather is the seat of myriad energetic quantum processes that could have profound implications for future space travel. To understand these implications it will serve us to review briefly the historical development of the scientific view of what constitutes empty space.

At the time of the Greek philosophers, Democritus argued that empty space was truly a void, otherwise there would not be room for the motion of atoms. Aristotle, on the other hand, argued equally forcefully that what appeared to be empty space was in fact a plenum (a background filled with substance), for did not heat and light travel from place to place as if carried by some kind of medium? The argument went back and forth through the centuries until finally codified by Maxwell’s theory of the luminiferous ether, a plenum that carried electromagnetic waves, including light, much as water carries waves across its surface. Attempts to measure the properties of this ether, or to measure the Earth’s velocity through the ether (as in the Michelson-Morley experiment), however, met with failure. With the rise of special relativity, which did not require reference to such an underlying substrate, Einstein in 1905 effectively banished the ether in favor of the concept that empty space constitutes a true void. Ten years later, however, Einstein’s own development of the general theory of relativity, with its concept of curved space and distorted geometry, forced him to reverse his stand and opt for a richly endowed plenum, under the new label spacetime metric.

It was the advent of modern quantum theory, however, that established the quantum vacuum, so-called empty space, as a very active place, with particles arising and disappearing, a virtual plasma, and fields continuously fluctuating about their zero baseline values. The energy associated with such processes is called zero-point energy (ZPE), reflecting the fact that such activity remains even at absolute zero.
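As a brief worked illustration of why such activity survives at absolute zero, the standard textbook expressions can be written down. The symbols below ($\hbar$, $\omega$, $n$, $c$, $\rho$) are not used in the text above and are introduced here only for illustration. Each electromagnetic field mode of angular frequency $\omega$ behaves like a quantum harmonic oscillator whose lowest allowed energy is not zero, and summing that residual energy over the modes gives a spectral energy density that grows as the cube of the frequency:

```latex
% Energy levels of a single field mode (quantum harmonic oscillator)
E_n = \left(n + \tfrac{1}{2}\right)\hbar\omega , \qquad n = 0, 1, 2, \dots
% Ground-state (zero-point) energy of that mode, present even at T = 0
E_0 = \tfrac{1}{2}\hbar\omega
% Zero-point spectral energy density of the free electromagnetic field
\rho(\omega)\, d\omega = \frac{\hbar\,\omega^{3}}{2\pi^{2}c^{3}}\, d\omega
```

Because the density rises as $\omega^{3}$, the total depends strongly on where the spectrum is assumed to cut off, which is why estimates of the total energy density vary so widely.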

The Vacuum As A Potential Energy Source

At its most fundamental level, we now recognize that the quantum vacuum is an enormous reservoir of untapped energy, with energy densities conservatively estimated by Feynman and Hibbs to be on the order of nuclear energy densities or greater. Therefore, the question is, can the ZPE be “mined” for practical use? If so, it would constitute a virtually ubiquitous energy supply, a veritable “Holy Grail” energy source for space propulsion.

As utopian as such a possibility may seem, physicist Robert Forward at Hughes Research Laboratories demonstrated proof-of-principle in a paper, “Extracting Electrical Energy from the Vacuum by Cohesion of Charged Foliated Conductors.” Forward’s approach exploited a phenomenon called the Casimir Effect, an attractive quantum force between closely spaced metal plates, named for its discoverer, H. G. B. Casimir of Philips Laboratories in the Netherlands. The Casimir force, recently measured with high accuracy by S. K. Lamoreaux at the University of Washington, derives from partial shielding of the interior region of the plates from the background zero-point fluctuations of the vacuum electromagnetic field. As shown by Los Alamos theorists Milonni et al., this shielding results in the plates being pushed together by the unbalanced ZPE radiation pressures. The result is a corollary conversion of vacuum energy to some other form such as heat. Proof that such a process violates neither energy nor thermodynamic constraints can be found in a paper by a colleague and myself (Cole & Puthoff) under the title “Extracting Energy and Heat from the Vacuum.”
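To give a feel for the magnitude of the plate-to-plate attraction described above, here is a minimal numerical sketch. It assumes the idealized textbook case of two perfectly conducting, parallel plates at zero temperature, for which the attractive pressure is P = π²ħc / (240 d⁴), with d the plate separation. The function name `casimir_pressure` and the sample separations are hypothetical choices for illustration; they do not come from Forward's paper or from the measurements cited above.

```python
import math

# Physical constants (SI units)
HBAR = 1.054571817e-34  # reduced Planck constant, J*s
C = 299792458.0         # speed of light in vacuum, m/s


def casimir_pressure(separation_m: float) -> float:
    """Magnitude of the attractive Casimir pressure (Pa) between two ideal,
    perfectly conducting parallel plates at zero temperature.

    Assumption: the idealized formula P = pi^2 * hbar * c / (240 * d^4).
    Real plates (finite conductivity, roughness, temperature) deviate from it.
    """
    if separation_m <= 0:
        raise ValueError("plate separation must be positive")
    return math.pi ** 2 * HBAR * C / (240.0 * separation_m ** 4)


if __name__ == "__main__":
    # Illustrative separations only: 1 micron, 100 nm, 10 nm
    for d in (1e-6, 1e-7, 1e-8):
        print(f"d = {d:.0e} m  ->  P = {casimir_pressure(d):.3e} Pa")
```

The steep 1/d⁴ dependence is the point of the exercise: the pressure is on the order of a millipascal at a separation of one micron, and every halving of the gap multiplies it sixteenfold, which is why the effect only becomes appreciable at very small separations.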

Attempts to harness the Casimir and related effects for vacuum energy conversion are ongoing in our laboratory and elsewhere. The fact that its potential application to space propulsion has not gone unnoticed by the Air Force can be seen in its request for proposals for the FY-1986 Defense SBIR Program. Under entry AF86-77, Air Force Rocket Propulsion Laboratory (AFRPL), Topic: Non-Conventional Propulsion Concepts, we find the statement: “Bold, new non-conventional propulsion concepts are solicited…. The specific areas in which AFRPL is interested include…. (6) Esoteric energy sources for propulsion including the zero point quantum dynamic energy of vacuum space.”

Several experimental formats for tapping the ZPE for practical use are under investigation in our laboratory. An early one of interest is based on the idea of a Casimir pinch effect in non-neutral plasmas, basically a plasma equivalent of Forward’s electromechanical charged-plate collapse. The underlying physics is described in a paper submitted for publication by myself and a colleague, and it is illustrative that the first of several patents issued to a consultant to our laboratory, K. R. Shoulders (1991), contains the descriptive phrase “…energy is provided… and the ultimate source of this energy appears to be the zero-point radiation of the vacuum continuum.” Another intriguing possibility is provided by the phenomenon of sonoluminescence, bubble collapse in an ultrasonically driven fluid which is accompanied by intense, sub-nanosecond light radiation. Although the jury is still out as to the mechanism of light generation, Nobelist Julian Schwinger (1993) has argued for a Casimir interpretation. Possibly related experimental evidence for excess heat generation in ultrasonically driven cavitation in heavy water is claimed in an EPRI Report by E-Quest Sciences, although attributed to a nuclear micro-fusion process. Work is under way in our laboratory to see if this claim can be replicated.

Yet another proposal for ZPE extraction is described in a recent patent (Mead & Nachamkin, 1996). The approach proposes the use of resonant dielectric spheres, slightly detuned from each other, to provide a beat-frequency downshift of the more energetic high-frequency components of the ZPE to a more easily captured form. We are discussing the possibility of a collaborative effort between us to determine whether such an approach is feasible. Finally, an approach utilizing micro-cavity techniques to perturb the ground state stability of atomic hydrogen is under consideration in our lab. It is based on a paper of mine (Puthoff, 1987) in which I put forth the hypothesis that the nonradiative nature of the ground state is due to a dynamic equilibrium in which radiation emitted due to accelerated electron ground state motion is compensated by absorption from the ZPE. If this hypothesis is true, there exists the potential for energy generation by the application of the techniques of so-called cavity quantum electrodynamics (QED). In cavity QED, excited atoms are passed through Casimir-like cavities whose structure suppresses electromagnetic cavity modes at the transition frequency between the atom’s excited and ground states. The result is that the so-called “spontaneous” emission time is lengthened considerably (for example, by factors of ten), simply because spontaneous emission is not so spontaneous after all, but rather is driven by vacuum fluctuations. Eliminate the modes, and you eliminate the zero-point fluctuations of the modes, hence suppressing decay of the excited state. As stated in a review article on cavity QED in Scientific American, “An excited atom that would ordinarily emit a low-frequency photon cannot do so, because there are no vacuum fluctuations to stimulate its emission….” In its application to energy generation, mode suppression would be used to perturb the hypothesized dynamic ground state absorption/emission balance to lead to energy release.

An example in which Nature herself may have taken advantage of energetic vacuum effects is discussed in a model published by ZPE colleagues A. Rueda of California State University at Long Beach, B. Haisch of Lockheed-Martin, and D. Cole of IBM (1995). In a paper published in the Astrophysical Journal, they propose that the vast reaches of outer space constitute an ideal environment for ZPE acceleration of nuclei and thus provide a mechanism for “powering up” cosmic rays. Details of the model would appear to account for other observed phenomena as well, such as the formation of cosmic voids. This raises the possibility of utilizing a “sub-cosmic-ray” approach to accelerate protons in a cryogenically cooled, collision-free vacuum trap and thus extract energy from the vacuum fluctuations by this mechanism.

The Vacuum as the Source of Gravity and Inertia

What of the fundamental forces of gravity and inertia that we seek to overcome in space travel? We have phenomenological theories that describe their effects (Newton’s Laws and their relativistic generalizations), but what of their origins?

The first hint that these phenomena might themselves be traceable to roots in the underlying fluctuations of the vacuum came in a study published by the well-known Russian physicist Andrei Sakharov. Searching to derive Einstein’s phenomenological equations for general relativity from a more fundamental set of assumptions, Sakharov came to the conclusion that the entire panoply of general relativistic phenomena could be seen as induced effects brought about by changes in the quantum-fluctuation energy of the vacuum due to the presence of matter. In this view the attractive gravitational force is more akin to the induced Casimir force discussed above than to the fundamental inverse-square-law Coulomb force between charged particles with which it is often compared. Although speculative when first introduced by Sakharov, this hypothesis has led to a rich and ongoing literature, including contributions of my own on quantum-fluctuation-induced gravity, a literature that continues to yield deep insight into the role played by vacuum forces.

Given an apparent deep connection between gravity and the zero-point fluctuations of the vacuum, a similar connection must exist between these selfsame vacuum fluctuations and inertia. This is because it is an empirical fact that the gravitational and inertial masses have the same value, even though the underlying phenomena are quite disparate. Why, for example, should a measure of the resistance of a body to being accelerated, even if far from any gravitational field, have the same value that is associated with the gravitational attraction between bodies? Indeed, if one is determined by vacuum fluctuations, so must the other. To get to the heart of inertia, consider a specific example in which you are standing on a train in the station. As the train leaves the platform with a jolt, you could be thrown to the floor. What is this force that knocks you down, seemingly coming out of nowhere? This phenomenon, which we conveniently label inertia and go on about our physics, is a subtle feature of the universe that has perplexed generations of physicists from Newton to Einstein. Since in this example the sudden disquieting imbalance results from acceleration “relative to the fixed stars,” in its most provocative form one could say that it was the “stars” that delivered the punch. This key feature was emphasized by the Austrian philosopher of science Ernst Mach, and is now known as Mach’s Principle. Nonetheless, the mechanism by which the stars might do this deed has eluded convincing explication.

Addressing this issue in a paper entitled “Inertia as a Zero-Point Field Lorentz Force,” my colleagues and I (Haisch, Rueda & Puthoff, 1994) were successful in tracing the problem of inertia and its connection to Mach’s Principle to the ZPE properties of the vacuum. In a sentence, although a uniformly moving body does not experience a drag force from the (Lorentz-invariant) vacuum fluctuations, an accelerated body meets a resistance (force) proportional to the acceleration. By accelerated we mean, of course, accelerated relative to the fixed stars. It turns out that an argument can be made that the quantum fluctuations of distant matter structure the local vacuum-fluctuation frame of reference. Thus, in the example of the train, the punch was delivered by the wall of vacuum fluctuations acting as a proxy for the fixed stars through which one attempted to accelerate.

The implication for space travel is this: the results generated in the field of cavity QED (discussed above) provide experimental evidence that vacuum fluctuations can be altered by technological means. This leads to the corollary that, in principle, gravitational and inertial masses can also be altered. The possibility of altering mass with a view to easing the energy burden of future spaceships has been seriously considered by the Advanced Concepts Office of the Propulsion Directorate of the Phillips Laboratory at Edwards Air Force Base. Gravity researcher Robert Forward accepted an assignment to review this concept. His deliverable product was to recommend a broad, multipronged effort involving laboratories from around the world to investigate the inertia model experimentally. The Abstract reads in part:

Many researchers see the vacuum as a central ingredient of 21st-Century physics…. Some even believe the vacuum may be harnessed to provide a limitless supply of energy. This report summarizes an attempt to find an experiment that would test the Haisch, Rueda and Puthoff (HRP) conjecture that the mass and inertia of a body are induced effects brought about by changes in the quantum-fluctuation energy of the vacuum…. It was possible to find an experiment that might be able to prove or disprove that the inertial mass of a body can be altered by making changes in the vacuum surrounding the body.

With regard to action items, Forward in fact recommends a ranked list of not one but four experiments to be carried out to address the ZPE-inertia concept and its broad implications. The recommendations included investigation of the proposed “sub-cosmic-ray energy device” mentioned earlier, and the investigation of a hypothesized “inertia-wind” effect proposed by our laboratory and possibly detected in early experimental work, though the latter possibility is highly speculative at this point.

Engineering the Vacuum For “Warp Drive”

Perhaps one of the most speculative, but nonetheless scientifically grounded, proposals of all is the so-called Alcubierre Warp Drive. Taking on the challenge of determining whether Warp Drive à la Star Trek was a scientific possibility, general relativity theorist Miguel Alcubierre of the University of Wales set himself the task of determining whether faster-than-light travel was possible within the constraints of standard theory. Although such clearly could not be the case in the flat space of special relativity, general relativity permits consideration of altered spacetime metrics where such a possibility is not a priori ruled out. Alcubierre’s further self-imposed constraints on an acceptable solution included the requirements that no net time distortion should occur (breakfast on Earth, lunch on Alpha Centauri, and home for dinner with your wife and children, not your great-great-great grandchildren), and that the occupants of the spaceship were not to be flattened against the bulkhead by unconscionable accelerations.

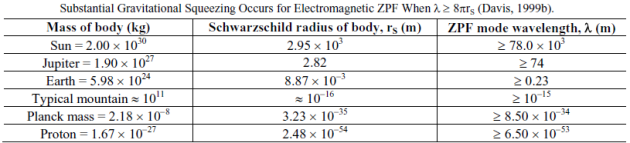

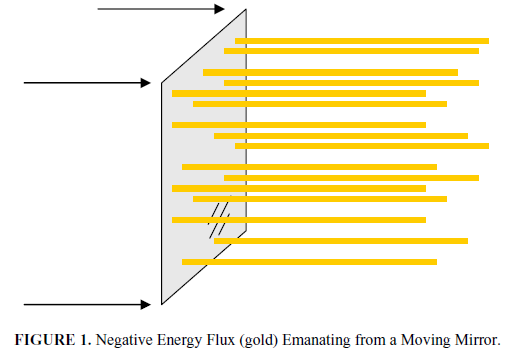

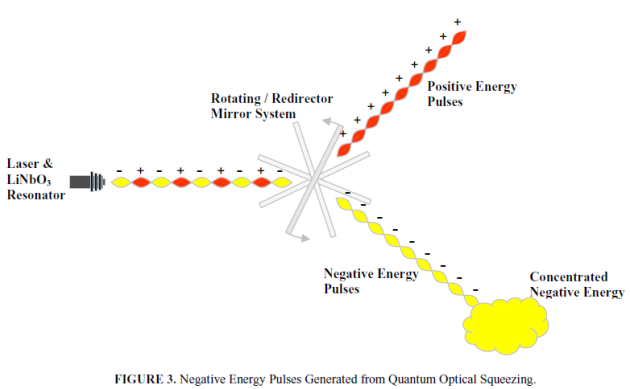

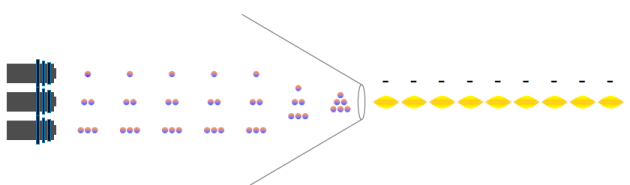

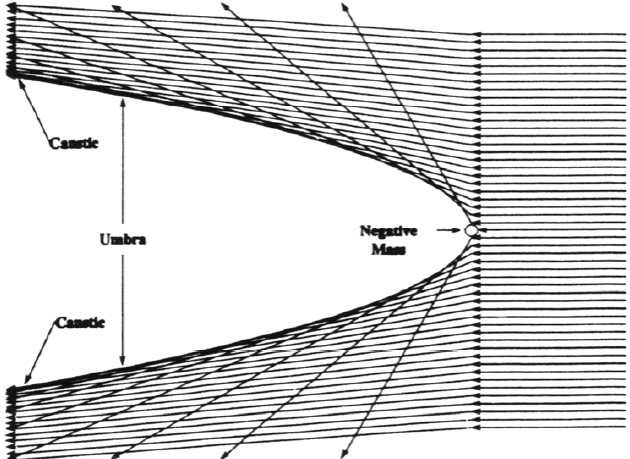

A solution meeting all of the above requirements was found and published by Alcubierre in Classical and Quantum Gravity in 1994. The solution discovered by Alcubierre involved the creation of a local distortion of spacetime such that spacetime is expanded behind the spaceship, contracted ahead of it, and yields a hypersurfer-like motion faster than the speed of light as seen by observers outside the disturbed region. In essence, on the outgoing leg of its journey the spaceship is pushed away from Earth and pulled towards its distant destination by the engineered local expansion of spacetime itself. For follow-up on the broader aspects of “metric engineering” concepts, one can refer to a paper published by myself in Physics Essays (Puthoff, 1996). Interestingly enough, the engineering requirements rely on the generation of macroscopic, negative-energy-density, Casimir-like states in the quantum vacuum of the type discussed earlier. Unfortunately, meeting such requirements is beyond technological reach without some unforeseen breakthrough.
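For readers who want to see the form of the solution just described, the metric is sketched below in the notation commonly used for Alcubierre's 1994 result. The symbols ($x_s$, $v_s$, $r_s$, $f$, $R$, $\sigma$, $\theta$) do not appear in the text above and are introduced here as labels: $x_s(t)$ is the ship's trajectory along the $x$ axis, $v_s$ its coordinate velocity, $R$ the bubble radius, and $\sigma$ a parameter setting how sharp the bubble wall is. The factor of $c$ is restored here; the original paper works in units with $c = 1$.

```latex
% Alcubierre line element: flat space everywhere except in the bubble wall
ds^{2} = -c^{2}\,dt^{2} + \bigl(dx - v_s(t)\, f(r_s)\, dt\bigr)^{2} + dy^{2} + dz^{2}
% Ship velocity and distance from the ship's position
v_s(t) = \frac{dx_s(t)}{dt}, \qquad
r_s(t) = \sqrt{\bigl(x - x_s(t)\bigr)^{2} + y^{2} + z^{2}}
% Shaping function: approximately 1 inside the bubble, approximately 0 far outside
f(r_s) = \frac{\tanh\bigl(\sigma (r_s + R)\bigr) - \tanh\bigl(\sigma (r_s - R)\bigr)}{2 \tanh(\sigma R)}
% Expansion of the volume elements: positive behind the ship, negative ahead
\theta = v_s\, \frac{x - x_s}{r_s}\, \frac{df}{dr_s}
```

Inside the bubble $f \approx 1$ and the occupants follow a free-fall path with proper time equal to coordinate time, which is how the solution meets the two constraints stated earlier: no net time distortion and no crushing accelerations. The sign of $\theta$ is positive behind the ship and negative ahead of it, matching the expansion and contraction described in the text.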

Related, of course, is the knowledge that general relativity permits the possibility of wormholes, topological tunnels which in principle could connect distant parts of the universe, a cosmic subway so to speak. Publishing in the American Journal of Physics, theorists Morris and Thorne outlined in some detail the requirements for traversable wormholes and found that, in principle, the possibility exists provided one has access to Casimir-like, negative-energy-density quantum vacuum states. This has led to a rich literature, summarized recently in a book by Matt Visser of Washington University. Again, the technological requirements appear out of reach for the foreseeable future, perhaps awaiting new techniques for cohering the ZPE vacuum fluctuations in order to meet the energy-density requirements.

Where does this leave us? As we peer into the heavens from the depth of our gravity well, hoping for some “magic” solution that will launch our spacefarers first to the planets and then to the stars, we are reminded of Arthur C. Clarke’s phrase that highly-advanced technology is essentially indistinguishable from magic. Fortunately, such magic appears to be waiting in the wings of our deepening understanding of the quantum vacuum in which we live.

[Ad: Can the Vacuum Be Engineered for Space Flight Applications? Overview of Theory and Experiments By H. E. Puthoff(PDF)]

Scientists have apparently broken the universe’s speed limit. For generations, physicists believed there is nothing faster than light moving through a vacuum – a speed of 186,000 miles per second. But in an experiment in Princeton, N.J., physicists sent a pulse of laser light through cesium vapor so quickly that it left the chamber before it had even finished entering. The pulse traveled 310 times the distance it would have covered if the chamber had contained a vacuum.

To get the highest resolution from the scanning tunneling microscope, the system was then cooled to a few degrees above absolute zero. Both the graphene and the platinum contracted – but the platinum shrank more, with the result that excess graphene pushed up into bubbles, measuring four to 10 nanometers (billionths of a meter) across and from a third to more than two nanometers high. To confirm that the experimental observations were consistent with theoretical predictions, Castro Neto worked with Guinea to model a nanobubble typical of those found by the Crommie group. The resulting theoretical picture was a near-match to what the experimenters had observed: a strain-induced pseudo-magnetic field some 200 to 400 tesla strong in the regions of greatest strain, for nanobubbles of the correct size.
